Reconstructing complex 3D scenes and synthesizing novel views have seen rapid progress in recent years. Neural Radiance Fields (NeRF) demonstrated that continuous volumetric radiance fields can achieve high-quality image synthesis, but their long training and rendering times limit their practicality. 3D Gaussian Splatting (3DGS) addressed these issues by representing scenes with millions of Gaussians, enabling real-time rendering and fast optimization.

However, Gaussian primitives are not natively compatible with the mesh-based pipelines used in VR headsets, game engines, and real-time graphics applications. Existing solutions attempt to convert Gaussians into meshes through post-processing or two-stage pipelines, which increases complexity and often degrades visual quality.

In this work, we introduce Triangle Splatting+, which directly optimizes triangles, the fundamental primitive of computer graphics, within a differentiable splatting framework. We reformulate the triangle parametrization so that triangles are connected through shared vertices, and we design a training strategy that enforces opaque triangles. The final output is immediately usable in standard graphics engines without post-processing while retaining high visual quality.
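To make "connectivity through shared vertices" concrete, here is a minimal Python sketch of an indexed triangle representation, the convention used by standard mesh formats: positions are stored once in a vertex pool, and each triangle stores three indices into that pool. This is an illustration under our own assumptions, not the paper's actual parametrization; `SharedVertexMesh` and `soup_position_params` are hypothetical names. It shows two consequences of sharing: moving one vertex updates every triangle that references it, and the mesh needs fewer position parameters than an unconnected triangle soup.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class SharedVertexMesh:
    """Hypothetical indexed mesh: triangles store indices into a shared vertex pool."""

    vertices: List[Vec3] = field(default_factory=list)
    faces: List[Tuple[int, int, int]] = field(default_factory=list)

    def triangle(self, f: int) -> Tuple[Vec3, Vec3, Vec3]:
        # Look up the three corner positions of face f through its indices.
        i, j, k = self.faces[f]
        return self.vertices[i], self.vertices[j], self.vertices[k]

    def move_vertex(self, v: int, offset: Vec3) -> None:
        # Updating the shared position moves every triangle that references vertex v.
        x, y, z = self.vertices[v]
        dx, dy, dz = offset
        self.vertices[v] = (x + dx, y + dy, z + dz)

    def num_position_params(self) -> int:
        # Each vertex position is stored exactly once: 3 floats per vertex.
        return 3 * len(self.vertices)


def soup_position_params(num_triangles: int) -> int:
    """An unconnected triangle soup stores 3 independent corners (9 floats) per triangle."""
    return 9 * num_triangles
```

For example, a quad split into faces `(0, 1, 2)` and `(0, 2, 3)` over four vertices needs 12 position parameters instead of 18, and calling `move_vertex(2, ...)` moves the shared corner of both triangles at once. In a gradient-based optimizer, a shared vertex would receive the summed gradients of all triangles that use it, so adjacent triangles stay attached as they move.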

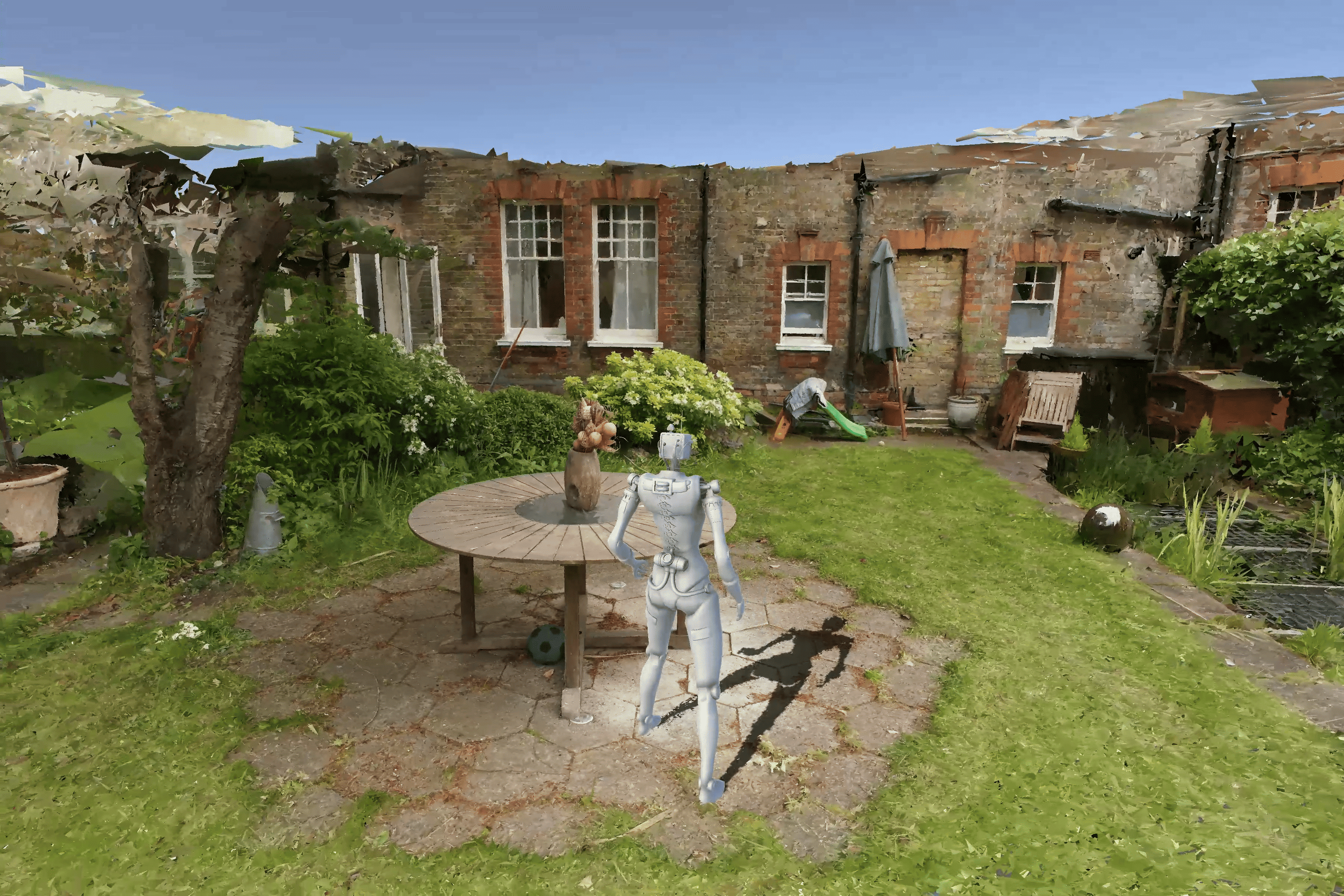

Experiments on the Mip-NeRF360 and Tanks & Temples datasets show that Triangle Splatting+ achieves state-of-the-art performance in mesh-based novel view synthesis. Our method surpasses prior splatting approaches in visual fidelity while remaining efficient and fast to train. Moreover, the resulting semi-connected meshes support downstream applications such as physics-based simulation or interactive walkthroughs.

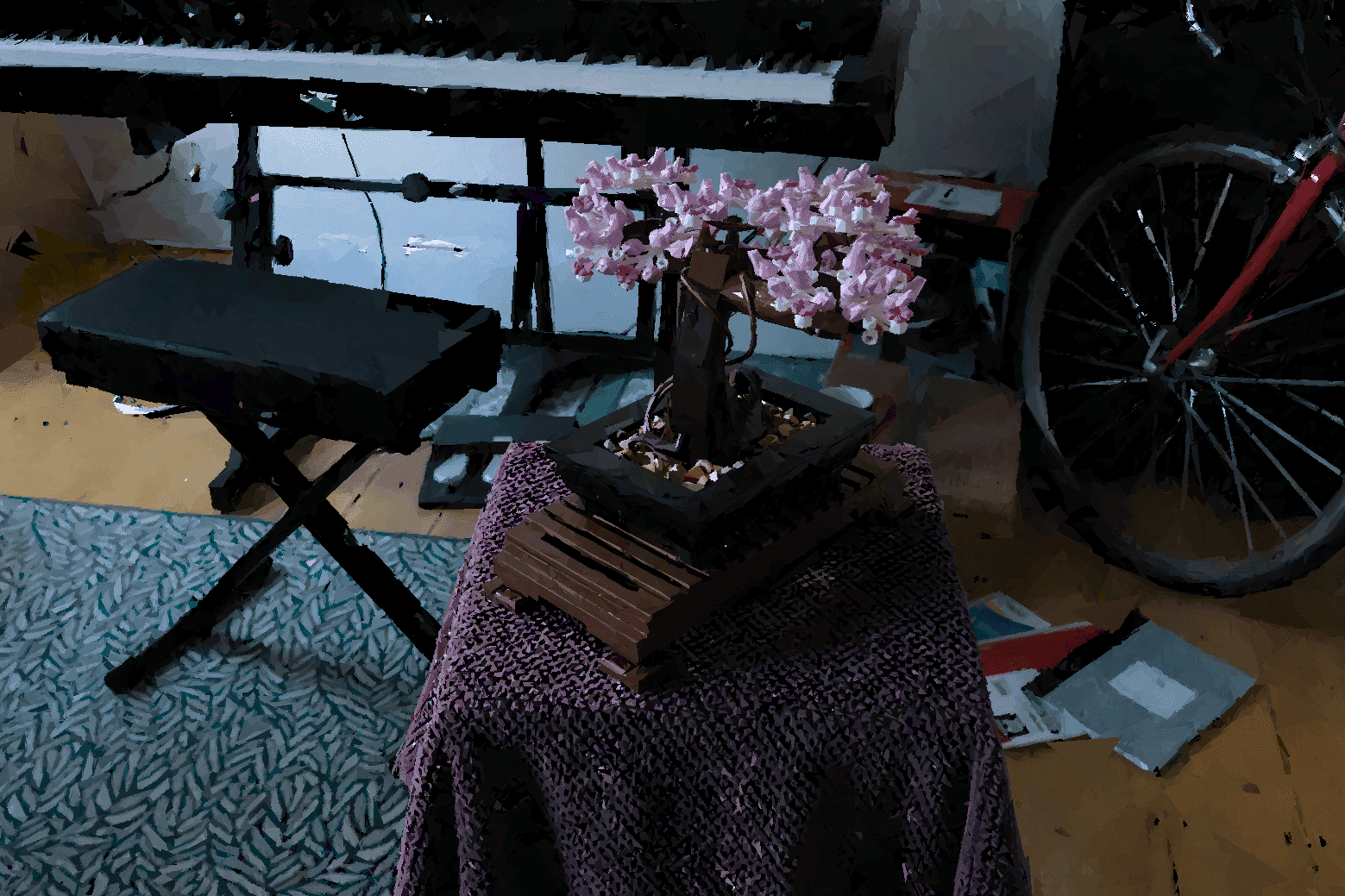

Triangle Splatting+ delivers high-quality novel view synthesis with fast rendering, while accurately reconstructing fine details, as demonstrated in the Bonsai scene. By relying solely on opaque triangles, the final output can be directly used in game engines and mesh-based renderers without post-processing.

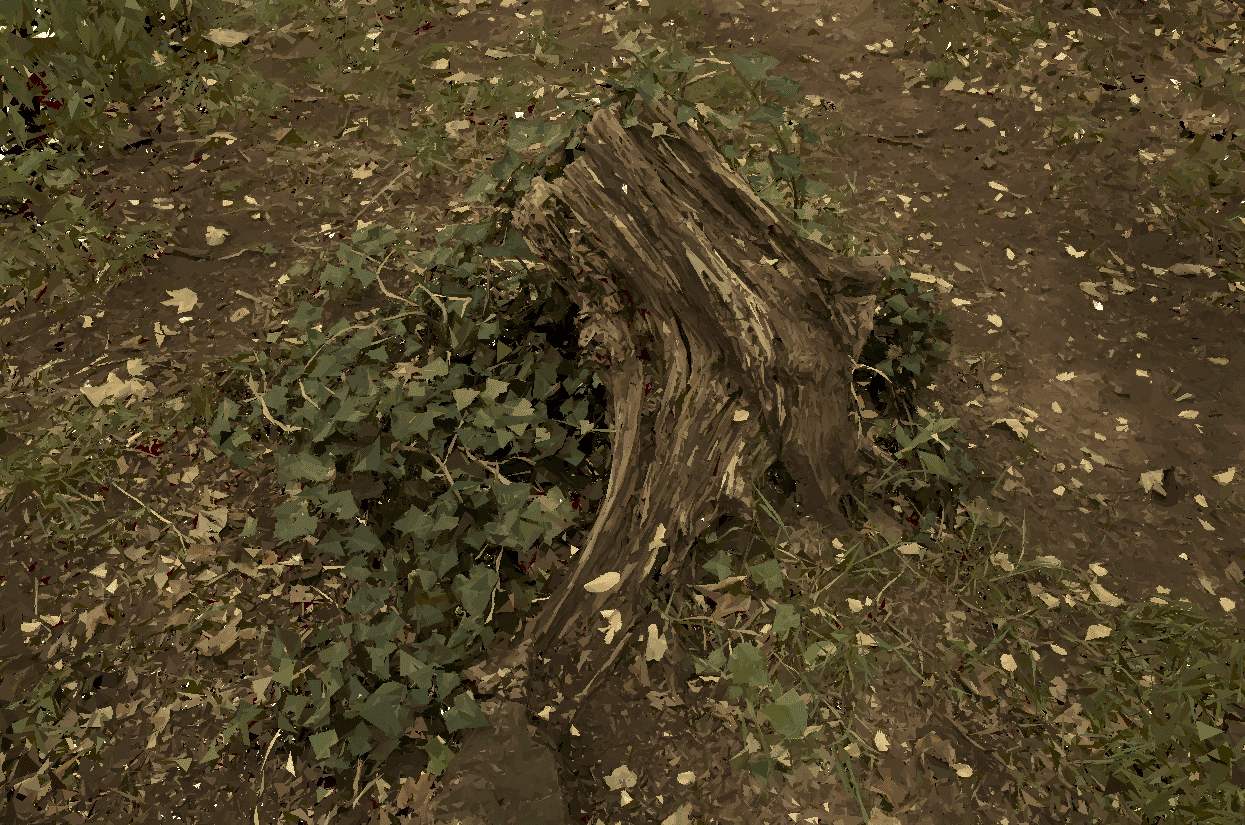

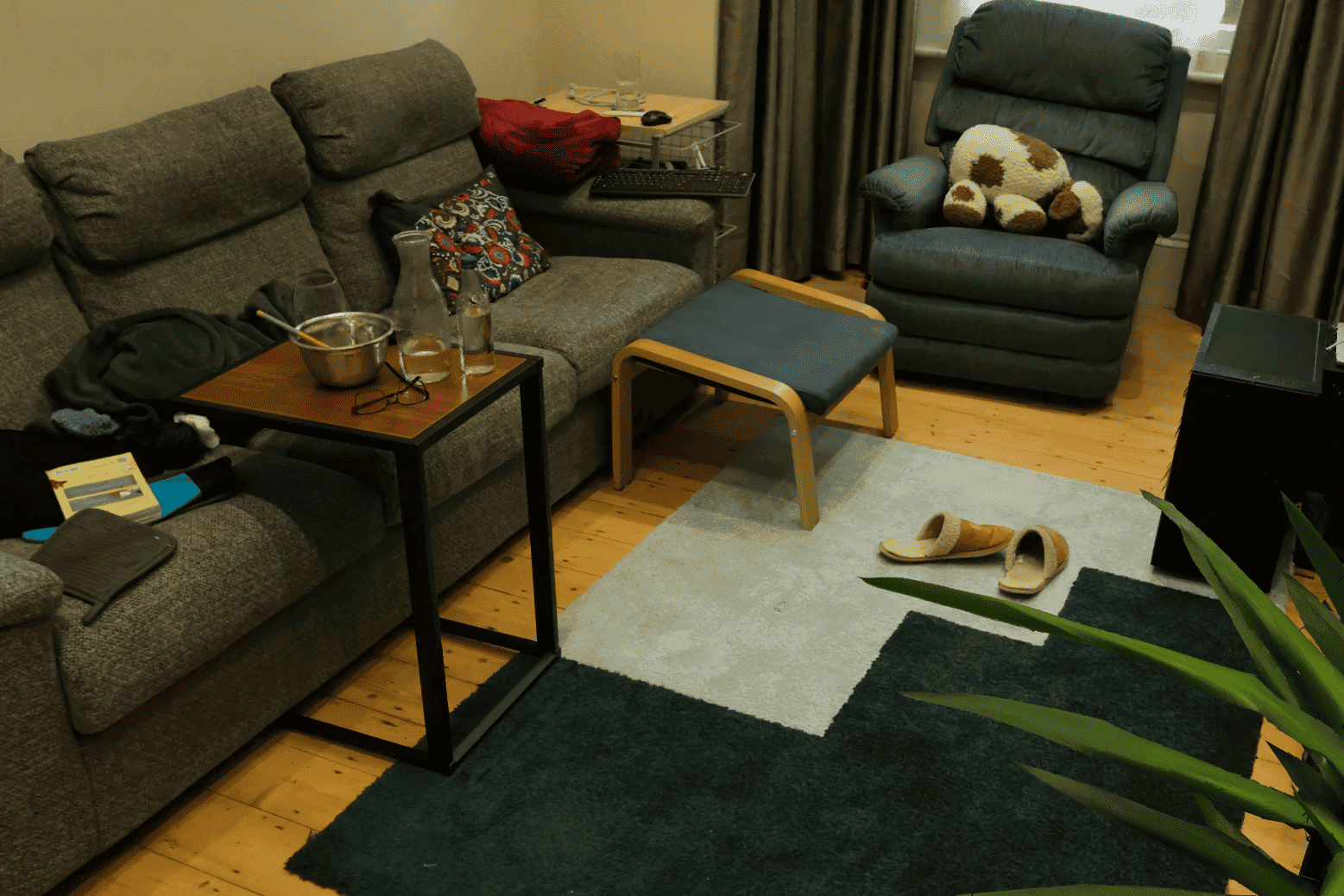

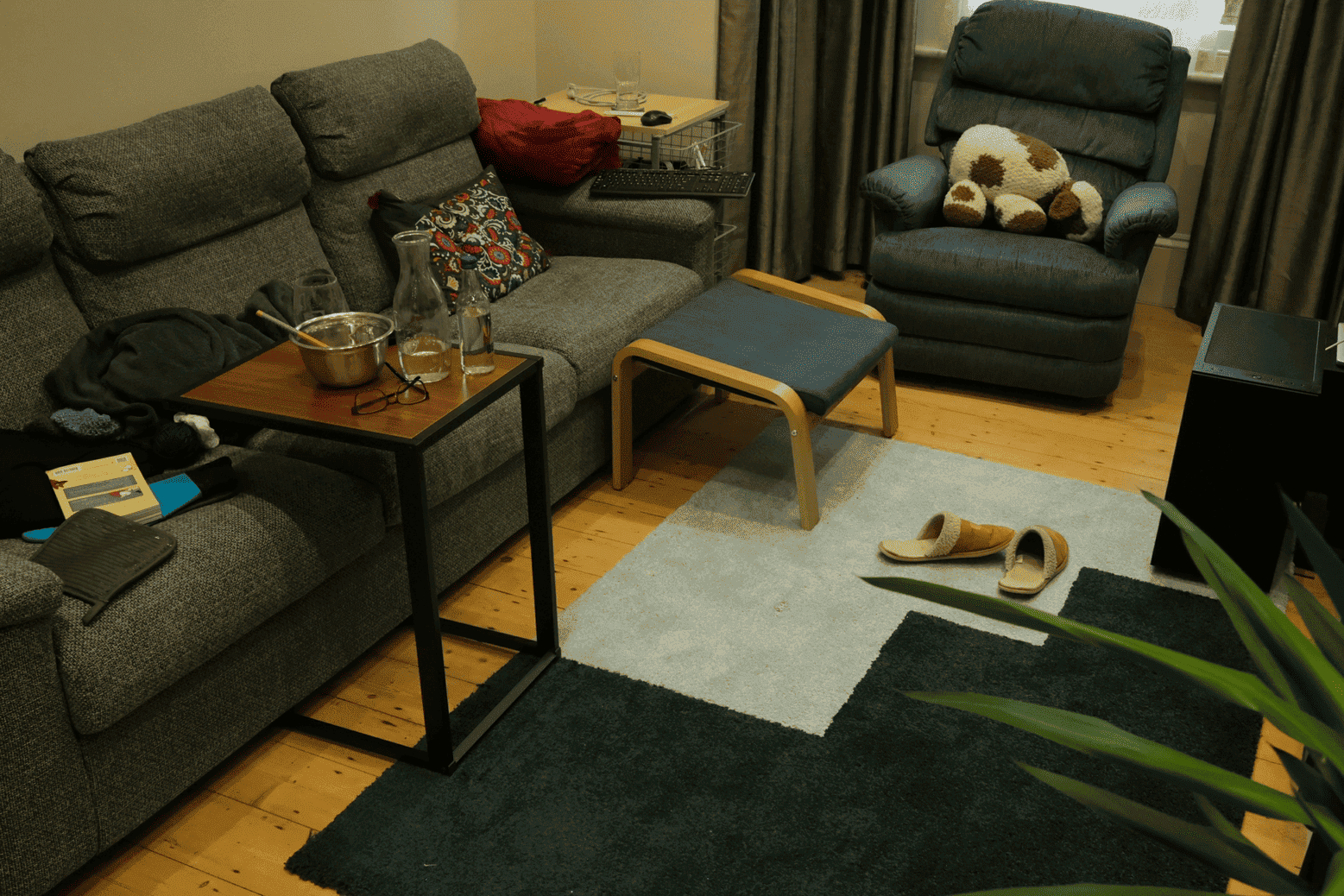

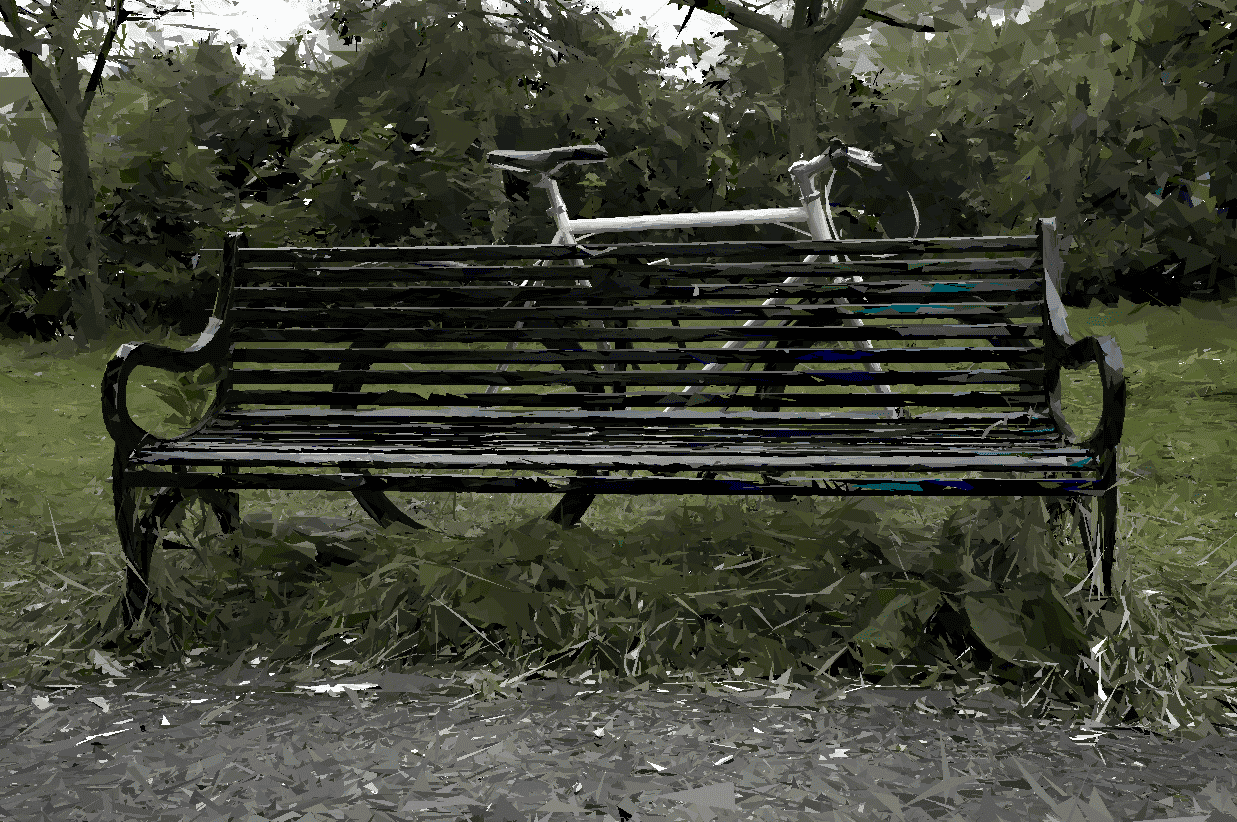

Triangle Splatting+ (1) enables fast training: both scenes in the upper row were optimized in under 25 minutes; (2) requires little memory: the final output files are below 40 MB without any compression; and (3) still achieves high visual quality.

Garden: 43 MB, 35 min training time

Bicycle: 51 MB, 35 min training time

Truck: 30 MB, 25 min training time

If you want to run a scene in a game engine yourself, you can download the Garden, Bicycle, or Truck scenes from the following link.

If you want to try out physics interactions or explore the environment with a character, you can download the Unity project from the following link: link.

In both cases, use 4× supersampling to achieve the highest visual quality.
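Because the scenes consist only of opaque triangles, each sample is either fully covered by a triangle or not, so triangle edges can look jagged at one sample per pixel; averaging several samples per pixel smooths them. The sketch below assumes that "4×" means four samples per output pixel, taken on a 2×2 grid by rendering at twice the width and height and then box-filtering down. It is a hypothetical illustration (`downsample_box` is our own name) using grayscale values for brevity, not how any particular engine implements supersampling; in Unity or other engines you would normally enable it through the engine's own anti-aliasing or render-scale settings.

```python
from typing import List

# Grayscale image as a list of rows of pixel intensities (simplification; real renders are RGB).
Image = List[List[float]]


def downsample_box(hi_res: Image, factor: int = 2) -> Image:
    """Average each factor x factor block of a supersampled render into one output pixel.

    With factor=2, every output pixel is the mean of 4 samples (4x supersampling).
    """
    height, width = len(hi_res), len(hi_res[0])
    if height % factor or width % factor:
        raise ValueError("image size must be divisible by the supersampling factor")
    out: Image = []
    for y in range(0, height, factor):
        row = []
        for x in range(0, width, factor):
            # Gather all samples that fall inside this output pixel.
            block = [hi_res[y + dy][x + dx] for dy in range(factor) for dx in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out
```

For a 4×4 render in which a diagonal triangle edge separates covered samples (1.0) from background (0.0), `downsample_box` returns a 2×2 image whose edge pixels take fractional values such as 0.25 instead of a hard 0 or 1.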

@article{Held2025Triangle+,
  title = {Triangle Splatting+: Differentiable Rendering with Opaque Triangles},
  author = {Held, Jan and Vandeghen, Renaud and Son, Sanghyun and Rebain, Daniel and Gadelha, Matheus and Zhou, Yi and Lin, Ming C. and Van Droogenbroeck, Marc and Tagliasacchi, Andrea},
  journal = {arXiv},
  year = {2025},
}